©Disney/Pixar.

July 20, 2017

Written by Leif Pedersen - Edited by Mike Seymour

Piper is the kind of film that elicits disbelief, a film that lets you admire the beauty of the imagery while also marveling at its technical prowess. As with any Pixar film, the focus is on the story, and this is where RenderMan helped the artists and the tools development team achieve the creative edge the director needed to tell this remarkable story.

RenderMan RIS enabled new creative workflows

Piper tells the story of a daring bird who is confronted with a tough problem and tries to approach it creatively and collaboratively. It is a fitting parallel for the challenges the development team faced, and the solutions it found, in creating Piper's beautiful, golden, sun-drenched beach.

-Erik Smitt, Director of Photography

This was the first project to come from Pixar's tools department, which is in charge of creating the cutting-edge technology behind the imagery in every Pixar film. Piper was also the only Pixar short that started in REYES and transitioned to RIS. Early development was done with REYES' physically plausible hybrid raytracer.

At the time, the team was getting interesting results, and traditional shading and lighting methods were beginning to be deployed: displacement maps, shadow maps, and point clouds for global illumination and subsurface scattering. But once the team got their hands on RIS, they were sold.

"Our initial images with RIS were very promising; the characters and their environments looked integrated, like they belonged in the same world," said Erik Smitt, Director of Photography on Piper. "We no longer had to approach our shots with per-character lighting rigs in order to create cohesion in the shot. This was very creatively liberating, because we could get close to a finished shot much quicker."

-Brett Levin, Technical Supervisor

Houdini Viewport vs Final Image

The team was able to light shots and approach shading in a completely physical manner, so much so "that the approach to many of the usual technical challenges had to be completely different," he adds. One example was the beach sand. "Traditionally, we would have used displacement maps and shading tricks to accomplish such an intimate and finely detailed beach," said Brett Levin, Technical Supervisor on Piper, "but we decided to see what RIS could do." And they did: the team decided to fill the entire beach with real grains of sand.

Piper simulated and rendered every grain of sand

Every shot in Piper is composed of millions of grains of sand, each made of around 5,000 polygons. No bump or displacement maps were used on the grains, just procedurally generated Houdini models. Displacements were the first option for creating the sand, but after many tests, the team found that the displacement detail needed to convey the sand close-ups was approximately 36 times the displacement map resolution normally used in production. That made displacements inefficient and made sand instancing a viable alternative.
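For a sense of why instancing wins at this scale, here is a back-of-the-envelope memory comparison. Every figure is an assumption for illustration, not Pixar production data: one million grains, 5,000 triangles per grain, 12 prototype grains, naive triangle storage of 36 bytes (three float32 vertices, no shared vertices), and one 4x4 float32 transform per instance.

```python
# Back-of-the-envelope comparison of unique geometry vs. instancing.
# All figures are illustrative assumptions, not Pixar production data.

BYTES_PER_FLOAT = 4
# Naive triangle storage: 3 vertices x 3 floats, no shared vertices (assumption).
BYTES_PER_TRIANGLE = 3 * 3 * BYTES_PER_FLOAT      # 36 bytes
# One 4x4 float32 transform matrix per instance (assumption).
BYTES_PER_INSTANCE = 16 * BYTES_PER_FLOAT         # 64 bytes


def unique_geometry_bytes(grains: int, tris_per_grain: int) -> int:
    """Memory if every grain carries its own copy of the mesh."""
    return grains * tris_per_grain * BYTES_PER_TRIANGLE


def instanced_bytes(grains: int, tris_per_grain: int, prototypes: int) -> int:
    """Memory if grains reference a few prototype meshes plus one transform each."""
    prototype_meshes = prototypes * tris_per_grain * BYTES_PER_TRIANGLE
    return prototype_meshes + grains * BYTES_PER_INSTANCE


if __name__ == "__main__":
    grains, tris, protos = 1_000_000, 5_000, 12
    naive = unique_geometry_bytes(grains, tris)
    inst = instanced_bytes(grains, tris, protos)
    print(f"unique geometry: {naive / 1e9:.1f} GB")
    print(f"instanced:       {inst / 1e6:.1f} MB")
    print(f"ratio:           {naive / inst:.0f}x")
```

Under these assumptions the unique-geometry case needs about 180 GB, while the instanced case needs roughly 66 MB, almost all of it per-instance transforms.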

Each sand grain was modeled like the real thing

The sand dunes were sculpted in Mudbox, and the resulting displaced mesh drove sand generation in Houdini using a Poisson distribution model. The resulting grains were simulated with Houdini's grain solver, which created a fine layer of sand on top of the mesh. Grain placement was driven by a combination of culling techniques based on camera frustum, facing angle, and distance, producing patches that vary from dense to coarse for optimal efficiency.
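A common way to get evenly spaced yet irregular placement is Poisson-disk sampling; the sketch below assumes that is what "Poisson distribution" refers to here and uses simple dart throwing on a flat 2D patch. It then thins the samples with a distance cutoff, a facing-angle test, and a keep probability that falls off with distance, a simplified stand-in for the frustum, facing, and distance culling described above. The camera position, radii, and thresholds are hypothetical, and this is not Houdini's scatter implementation.

```python
import math
import random


def poisson_disk_dart_throwing(width, height, radius, attempts, seed=0):
    """Poisson-disk sampling by dart throwing: accept a random point only if
    it is at least `radius` away from every previously accepted point."""
    rng = random.Random(seed)
    points = []
    for _ in range(attempts):
        candidate = (rng.uniform(0, width), rng.uniform(0, height))
        if all(math.dist(candidate, p) >= radius for p in points):
            points.append(candidate)
    return points


def cull_grains(points, camera, max_distance, min_facing, seed=0):
    """Hypothetical culling pass. Grains lie on the z = 0 ground plane with
    normal (0, 0, 1); the camera is assumed to sit above it (camera[2] > 0)."""
    rng = random.Random(seed)
    kept = []
    for x, y in points:
        to_cam = (camera[0] - x, camera[1] - y, camera[2])
        dist = math.sqrt(sum(c * c for c in to_cam))
        if dist > max_distance:
            continue                      # beyond the distance cutoff
        facing = to_cam[2] / dist         # cosine between normal and view direction
        if facing < min_facing:
            continue                      # seen at too grazing an angle
        # Keep probability falls off with distance: dense near, coarse far.
        if rng.random() < 1.0 - dist / max_distance:
            kept.append((x, y))
    return kept


if __name__ == "__main__":
    pts = poisson_disk_dart_throwing(10.0, 10.0, radius=0.5, attempts=3000)
    kept = cull_grains(pts, camera=(0.0, 0.0, 2.0), max_distance=12.0, min_facing=0.1)
    print(len(pts), "sampled,", len(kept), "kept after culling")
```

Dart throwing is quadratic in the number of points; production scatterers use spatial grids or Bridson-style sampling, but the spacing guarantee is the same.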

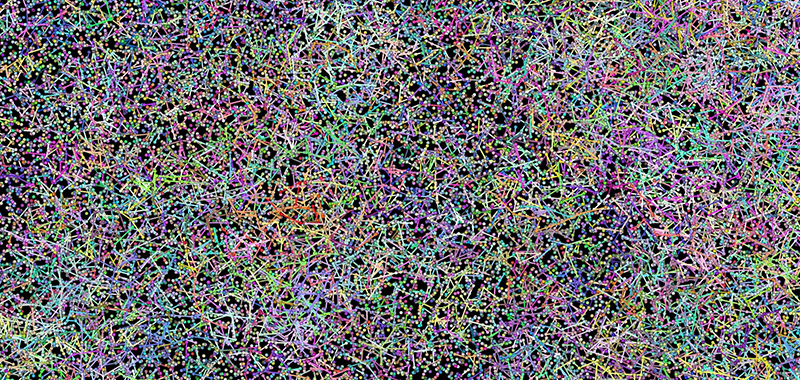

Poisson Distribution

Sand variety was driven by RenderMan primvars, which controlled shading variance and dry-to-wet shading parameters; this gave the shading team great control with only 12 unique grains of sand. The grains were populated using geometry instancing and point clouds, both handled via Pixar's USD (Universal Scene Description), now an open-source format, which allowed very complex scenes to be described efficiently.
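Per-instance primvars are what let a handful of grain models read as endless variety. The sketch below assumes each instance receives a prototype index (one of the 12 grains mentioned above), a random tint, and a wetness value derived from its height above a waterline. The attribute names, tint range, and wetness falloff are hypothetical illustrations, not RenderMan or USD attribute names.

```python
import random
from dataclasses import dataclass

NUM_PROTOTYPES = 12      # number of unique grain models (from the article)


@dataclass
class GrainInstance:
    proto_index: int     # which prototype grain this instance uses
    tint: float          # hypothetical per-instance brightness multiplier
    wetness: float       # hypothetical shading input: 0 = dry, 1 = wet


def assign_primvars(positions, waterline_y, wet_band, seed=0):
    """Assign per-instance variation. Grains at or below the waterline are
    fully wet; wetness fades to 0 over `wet_band` units above it."""
    rng = random.Random(seed)
    instances = []
    for _, y in positions:
        height_above = y - waterline_y
        wetness = min(1.0, max(0.0, 1.0 - height_above / wet_band))
        instances.append(GrainInstance(
            proto_index=rng.randrange(NUM_PROTOTYPES),
            tint=rng.uniform(0.85, 1.15),
            wetness=wetness,
        ))
    return instances


if __name__ == "__main__":
    for g in assign_primvars([(0.0, -0.2), (1.0, 0.3), (2.0, 2.0)], 0.0, 1.0):
        print(g)
```

Storing these as flat per-instance arrays, rather than as separate geometry, keeps the scene description small while the shader reads the values at render time.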

"Rendering such large data sets was going to be a challenge, but we were able to leverage instancing very successfully, with some scenes using a ray depth of 128, which would have been impossible with previous technology," said Brett Levin. Ray depths like that make renders incredibly lifelike but are traditionally very resource intensive, yet RIS handled the enormous computational load out of the box. "We were able to try new and inventive ways to problem solve a shot in a physical way, trusting in RenderMan's pathtracing technology, which we would have never attempted before RIS."

The Sand's Natural Luminance was Simulated by Light Transport

The sand's color was taken from an albedo palette derived from the real beach on an overcast day. From it, the team made many types of shaders, ranging from shell and stone to refractive glass. These also had to be wet or dry and have varying tints and shades in order to convincingly simulate billions of grains of sand.
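A hedged sketch of per-grain color variation: pick a swatch from a palette, apply a small random tint, then blend toward a darker wet version. The palette values are invented placeholders, and squaring each channel for the wet look is only an illustrative approximation. Real wet surfaces darken largely because light gets trapped in the water layer by internal reflection and has more chances to be absorbed, and the article does not say how Pixar's shaders modeled this.

```python
import random

# Hypothetical linear-RGB albedo swatches standing in for a captured palette.
PALETTE = [
    (0.55, 0.47, 0.36),   # tan quartz
    (0.62, 0.58, 0.52),   # pale shell
    (0.35, 0.30, 0.26),   # darker stone
    (0.70, 0.66, 0.58),   # bleached fragment
]


def wet_albedo(albedo, wetness):
    """Blend from dry albedo toward a darker, more saturated wet albedo.
    Squaring each channel is an illustrative approximation, not a physical model."""
    return tuple((1.0 - wetness) * a + wetness * a * a for a in albedo)


def grain_albedo(rng, wetness, tint_range=0.1):
    """Pick a palette swatch, apply a random tint, then apply wetness."""
    base = rng.choice(PALETTE)
    tint = 1.0 + rng.uniform(-tint_range, tint_range)
    tinted = tuple(min(1.0, a * tint) for a in base)
    return wet_albedo(tinted, wetness)


if __name__ == "__main__":
    print(grain_albedo(random.Random(7), wetness=0.0))   # dry grain
    print(grain_albedo(random.Random(7), wetness=1.0))   # same grain, fully wet
```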

Early sand look development - CG vs Photo

Another challenge was Mach bands, an optical illusion that exaggerates subtle changes in contrast where neighboring values meet, which made the sand images look unpleasant. The team created a custom dithering solution for this, making the final images feel much more natural.
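The article does not describe Pixar's custom dithering, so the following is only a generic illustration of the idea: add a small amount of noise before rounding a smooth gradient to 8 bits. Here the noise is triangular-PDF dither spanning about one quantization step either way. Without dither, a gentle ramp collapses into wide flat steps whose edges the eye exaggerates into Mach bands; with dither, those steps break up into fine grain. The ramp values and the "longest flat run" metric are assumptions chosen for the demonstration.

```python
import random


def quantize(values, levels=256):
    """Round values in [0, 1] to the nearest of `levels` evenly spaced codes."""
    top = levels - 1
    return [min(top, max(0, round(v * top))) for v in values]


def quantize_dithered(values, levels=256, seed=0):
    """Add triangular-PDF dither (sum of two uniform +/-0.5 code offsets) before rounding."""
    rng = random.Random(seed)
    top = levels - 1
    out = []
    for v in values:
        noise = rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)
        out.append(min(top, max(0, round(v * top + noise))))
    return out


def longest_run(codes):
    """Length of the longest stretch of identical codes (a proxy for a visible band)."""
    best = run = 1
    for prev, cur in zip(codes, codes[1:]):
        run = run + 1 if cur == prev else 1
        best = max(best, run)
    return best


if __name__ == "__main__":
    # A very gentle ramp spanning only a few 8-bit codes, like a subtle sand gradient.
    ramp = [0.40 + 0.02 * i / 999 for i in range(1000)]
    print("longest flat band without dither:", longest_run(quantize(ramp)))
    print("longest flat band with dither:   ", longest_run(quantize_dithered(ramp)))
```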

-Erik Smitt, Director of Photography

Depending on the shoreline, sand can behave in many different ways. Creating these differing sand types was a nice challenge. The team ultimately categorized three distinct types: Wet, Moist, and Dry.

Sand Wetness Types

Explicit constraint networks were created to give the sand grains a degree of stickiness, with breakable constraints that allowed for a crumbling effect. By adjusting Houdini's grain solver, the team was able to separate these subtle differences in moisture into art-directable states that could be simulated and animated with predictable results.
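The breakable-constraint idea can be sketched in a few lines: neighboring grains are glued by bonds, and each bond breaks once its relative stretch exceeds a tolerance that depends on the moisture state. The per-state tolerances below are hypothetical, as is the stretch measure. Moderately damp sand is given the strongest glue because, as with sandcastles, damp sand tends to be the most cohesive. This shows only the logic such a network encodes, not Houdini's grain solver API.

```python
import math
from itertools import combinations

# Hypothetical maximum relative stretch each bond tolerates before breaking.
# Dry sand has no cohesion (no bonds); damp "moist" sand is the stickiest.
BREAK_STRETCH = {"dry": None, "moist": 0.30, "wet": 0.10}


def build_bonds(rest_positions, neighbor_radius):
    """Bond every pair of grains closer than neighbor_radius, storing rest length."""
    bonds = []
    for i, j in combinations(range(len(rest_positions)), 2):
        d = math.dist(rest_positions[i], rest_positions[j])
        if d < neighbor_radius:
            bonds.append((i, j, d))
    return bonds


def surviving_bonds(bonds, positions, state):
    """Keep only bonds whose relative stretch is within the state's tolerance."""
    limit = BREAK_STRETCH[state]
    if limit is None:                  # dry sand: nothing holds grains together
        return []
    kept = []
    for i, j, rest in bonds:
        stretch = (math.dist(positions[i], positions[j]) - rest) / rest
        if stretch <= limit:
            kept.append((i, j, rest))
    return kept


if __name__ == "__main__":
    rest = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    bonds = build_bonds(rest, neighbor_radius=1.5)
    pulled = [(0.0, 0.0), (1.2, 0.0), (2.2, 0.0)]   # first bond stretched by 20%
    for state in ("dry", "moist", "wet"):
        print(state, len(surviving_bonds(bonds, pulled, state)), "bonds survive")
```

In a real solver the surviving bonds would also pull grains back toward their rest lengths each substep; the sketch only shows the break test that produces crumbling.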

Sand Tension and Clumping

The water and foam used Houdini's FLIP solver. For timing, the team animated simple wave shapes, from which they extracted the leading-edge curve and encoded direction. They then built the FX wave shapes and adjusted their thickness based on flow. From there, they sourced the points and ran low-res FLIP simulations. Lastly, high-res FLIP simulations were run inside a frustum-padded area, using velocities from the low-res pass. Waves sometimes had to be retimed for artistic control.
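The article does not say exactly how the low-res velocities fed the high-res pass. One plausible approach, assumed here, is to sample the coarse velocity grid at each high-res particle position using bilinear interpolation (trilinear in 3D). The 2D corner-sampled grid layout, the cell size, and the function names are hypothetical.

```python
def sample_velocity(grid, cell_size, x, y):
    """Bilinearly interpolate a 2D grid of (vx, vy) velocities stored at cell
    corners; grid[j][i] holds the velocity at (i * cell_size, j * cell_size).
    The grid must be at least 2 x 2; positions outside it are clamped to the edge."""
    rows, cols = len(grid), len(grid[0])
    gx = min(max(x / cell_size, 0.0), cols - 1.0)
    gy = min(max(y / cell_size, 0.0), rows - 1.0)
    # Lower-left corner of the 2x2 stencil, kept inside the grid.
    i = min(int(gx), cols - 2)
    j = min(int(gy), rows - 2)
    fx, fy = gx - i, gy - j
    v00, v10 = grid[j][i], grid[j][i + 1]
    v01, v11 = grid[j + 1][i], grid[j + 1][i + 1]
    return tuple(
        (1 - fx) * (1 - fy) * a + fx * (1 - fy) * b + (1 - fx) * fy * c + fx * fy * d
        for a, b, c, d in zip(v00, v10, v01, v11)
    )


def seed_high_res(particles, grid, cell_size):
    """Give each high-res particle the low-res velocity at its position."""
    return [(p, sample_velocity(grid, cell_size, *p)) for p in particles]


if __name__ == "__main__":
    coarse = [[(0.0, 0.0), (2.0, 0.0)],
              [(0.0, 2.0), (2.0, 2.0)]]
    print(seed_high_res([(0.25, 0.25), (0.5, 0.5), (0.9, 0.1)], coarse, 1.0))
```

Seeding from the coarse pass keeps the high-res simulation's large-scale motion, and therefore the animated wave timing, consistent with the low-res result while adding finer detail only inside the padded frustum.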

Water Simulation Breakdown

The foam was shaded with a hybrid volume/thin-surface shader and rendered with a ray depth of over 100 bounces. This gave the foam particles the refractive luminance needed to realistically convey water bubbles. "RenderMan loved these challenges. We were able to rely purely on light transport to convey such realism in the foam and sand. RIS was doing really well with the incredibly demanding tasks," said Erik Smitt.

Foam Simulation Breakdown

Several in-house solutions were used for bubble interaction and popping, such as GIN, Pixar's system for creating fields in 3D, as well as procedural foam patterns. The tiny bubbles were created using a power-law distribution function and then carried along with the water surface velocity. Density was based on particle proximity and, in turn, was used to drive foam popping and stacking.
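As a minimal sketch of the two ideas in that paragraph, the code below draws bubble radii from a bounded power law p(r) ∝ r^(-alpha) by inverse-transform sampling, and computes a neighbor-count density that could drive popping. The exponent, radius bounds, and popping rule (isolated bubbles pop, crowded ones stay and stack) are hypothetical choices; GIN is an in-house Pixar system and is not represented here.

```python
import math
import random


def sample_power_law(rng, r_min, r_max, alpha):
    """Draw a radius from p(r) ~ r**(-alpha) on [r_min, r_max] (alpha != 1)
    by inverting the cumulative distribution function."""
    k = 1.0 - alpha
    u = rng.random()
    lo, hi = r_min ** k, r_max ** k
    return (lo + u * (hi - lo)) ** (1.0 / k)


def local_density(positions, radius):
    """Count neighbors within `radius` of each bubble (brute force, O(n^2))."""
    return [
        sum(1 for j, q in enumerate(positions) if j != i and math.dist(p, q) < radius)
        for i, p in enumerate(positions)
    ]


def pop_mask(counts, crowded_threshold):
    """Hypothetical rule: bubbles with too few neighbors are flagged to pop."""
    return [c < crowded_threshold for c in counts]


if __name__ == "__main__":
    rng = random.Random(42)
    radii = [sample_power_law(rng, 0.01, 0.5, alpha=2.5) for _ in range(10_000)]
    small = sum(r < 0.05 for r in radii) / len(radii)
    print(f"fraction of bubbles under 0.05: {small:.2f}")   # most bubbles are tiny
```

A power law naturally yields many tiny bubbles and a few large ones, which is why it suits foam; with these hypothetical parameters roughly nine in ten bubbles fall below 0.05 units.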

Bubble Breakdown - GIN

Another clever shading trick worked around an issue inherent to liquid simulations and surface tension: a meniscus effect, in which an unwanted "lip" forms where the water meets the beach. To work around this, the team duplicated the entire beach geometry and used a dynamic IOR (index of refraction) blend to transition between the wet and moist sand. This got rid of the imprecision and created naturally lapping water.
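One way to picture why an IOR blend can hide a seam is the normal-incidence Fresnel reflectance F0 = ((n1 - n2) / (n1 + n2))^2. The sketch assumes the duplicated beach acts as a thin water layer whose IOR blends from 1.0 (matching air, so the layer effectively vanishes) to about 1.33 (water) as a wetness mask rises. The endpoint values, the linear blend, and the function names are assumptions; the article does not give Pixar's actual blend.

```python
def blended_ior(wetness, ior_dry=1.0, ior_wet=1.33):
    """Linearly blend the IOR of a hypothetical water-film layer.
    An IOR of 1.0 (matching air) makes the layer effectively invisible."""
    w = min(1.0, max(0.0, wetness))
    return (1.0 - w) * ior_dry + w * ior_wet


def fresnel_f0(n1, n2):
    """Fresnel reflectance at normal incidence between media with IORs n1 and n2."""
    return ((n1 - n2) / (n1 + n2)) ** 2


if __name__ == "__main__":
    for w in (0.0, 0.5, 1.0):
        n = blended_ior(w)
        print(f"wetness {w:.1f}: IOR {n:.3f}, F0 {fresnel_f0(1.0, n):.4f}")
```

Because reflectance falls smoothly to zero as the IOR approaches 1.0, the water layer fades out gradually instead of ending in a hard edge.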

Combined with this, procedurally generated water runoff in Houdini produced ripple patterns that the team used as image-based displacements in Katana, adding subtle touches to a beautiful beach shore.
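Image-based displacement moves each surface point along its normal by an amount read from a texture. The sketch assumes a grayscale ripple map stored as a 2D list of values in [0, 1], mid-gray (0.5) as the zero level, nearest-neighbor lookup, and a hypothetical scale. Production renderers filter the texture and displace micropolygons at render time, which this does not attempt.

```python
def sample_map(ripple_map, u, v):
    """Nearest-neighbor lookup in a grayscale map indexed by UVs in [0, 1]."""
    rows, cols = len(ripple_map), len(ripple_map[0])
    i = min(cols - 1, max(0, int(u * cols)))
    j = min(rows - 1, max(0, int(v * rows)))
    return ripple_map[j][i]


def displace(point, normal, uv, ripple_map, scale, midpoint=0.5):
    """Offset a point along its unit normal by (map value - midpoint) * scale."""
    h = (sample_map(ripple_map, *uv) - midpoint) * scale
    return tuple(p + h * n for p, n in zip(point, normal))


if __name__ == "__main__":
    ripple = [[0.5, 1.0],
              [0.0, 0.5]]
    up = (0.0, 0.0, 1.0)
    for uv in ((0.25, 0.25), (0.75, 0.25), (0.25, 0.75)):
        print(uv, displace((0.0, 0.0, 0.0), up, uv, ripple, scale=0.02))
```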

Houdini to Katana Water Ripples

Piper was heavily influenced by macro photography. The team originally planned to apply depth of field (DOF) in Nuke with a deep compositing workflow, but realized that RenderMan handled in-render DOF incredibly well, with an accuracy that was hard to match in post. For some exaggerated DOF shots, they used a combined technique: re-projecting the renders onto USD geometry and adding extra blur with deep image data, creating a more stylized look.
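To see why macro shots have such shallow depth of field, consider the standard thin-lens circle-of-confusion formula: for focal length f, aperture diameter A = f / N (N is the f-number), focus distance S1, and subject distance S2, the blur-circle diameter on the sensor is c = A * |S2 - S1| / S2 * f / (S1 - f). The lens values below are hypothetical. This is textbook optics, not a description of RenderMan's camera model.

```python
def circle_of_confusion(f_mm, f_number, focus_mm, subject_mm):
    """Thin-lens blur-circle diameter (mm on the sensor) for a point at
    subject_mm when the lens is focused at focus_mm (focus_mm > f_mm)."""
    aperture = f_mm / f_number                      # aperture diameter A
    return aperture * abs(subject_mm - focus_mm) / subject_mm * f_mm / (focus_mm - f_mm)


if __name__ == "__main__":
    # Hypothetical 100 mm lens at f/2.8; a point 10 mm behind the focus plane.
    for focus in (200.0, 2000.0):     # macro distance vs. ordinary distance
        c = circle_of_confusion(100.0, 2.8, focus, focus + 10.0)
        print(f"focused at {focus:.0f} mm: blur circle {c:.4f} mm")
```

With these numbers, the same 10 mm depth offset produces a blur circle of about 1.7 mm at macro distance versus under 0.01 mm at two meters, which is why a sand grain just millimeters off the focus plane goes soft in a macro shot.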

Piper Was Heavily Influenced by Macro Photography

Finally, to get rid of path-tracing noise, the development team was happy to use the new RenderMan Denoiser. Traditionally, refractive surfaces and heavy DOF require a lot of additional sampling to clean up entirely, but the Denoiser allowed the team to really push these techniques without fear of unwanted noise or impossibly long render times. They also leveraged integrator clamps and light path expression (LPE) filtering on caustic paths to kill "fireflies", isolated pixels far brighter than their neighbors.
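Clamping limits how much any single path sample can contribute, which removes fireflies at the cost of discarding some energy (the estimate becomes biased). The sketch below scales each sample down so its luminance stays under a cap before averaging. The Rec. 709 luminance weights are standard; the threshold, the sample values, and applying the clamp per sample are assumptions, not RenderMan's actual integrator settings.

```python
def luminance(rgb):
    """Rec. 709 luminance of a linear RGB triple."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def clamp_sample(rgb, max_luminance):
    """Scale a sample uniformly so its luminance does not exceed the cap,
    preserving its hue. Assumes max_luminance > 0."""
    lum = luminance(rgb)
    if lum <= max_luminance:
        return rgb
    s = max_luminance / lum
    return tuple(c * s for c in rgb)


def pixel_estimate(samples, max_luminance=None):
    """Average path samples for one pixel, optionally clamping each first."""
    if max_luminance is not None:
        samples = [clamp_sample(s, max_luminance) for s in samples]
    n = len(samples)
    return tuple(sum(s[k] for s in samples) / n for k in range(3))


if __name__ == "__main__":
    # 63 ordinary samples plus one rare, very bright caustic path.
    samples = [(0.2, 0.2, 0.2)] * 63 + [(500.0, 450.0, 400.0)]
    print("unclamped:", pixel_estimate(samples))
    print("clamped:  ", pixel_estimate(samples, max_luminance=2.0))
```

Without the clamp, one rare path dominates the pixel and shows up as a firefly; with it, the pixel stays close to its neighbors, traded against a small loss of legitimate caustic energy.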

In the end, Piper pushed RenderMan to new standards of physical shading and lighting, carrying its new RIS technology to unprecedented heights and paving the way for exciting new physically based workflows at Pixar.